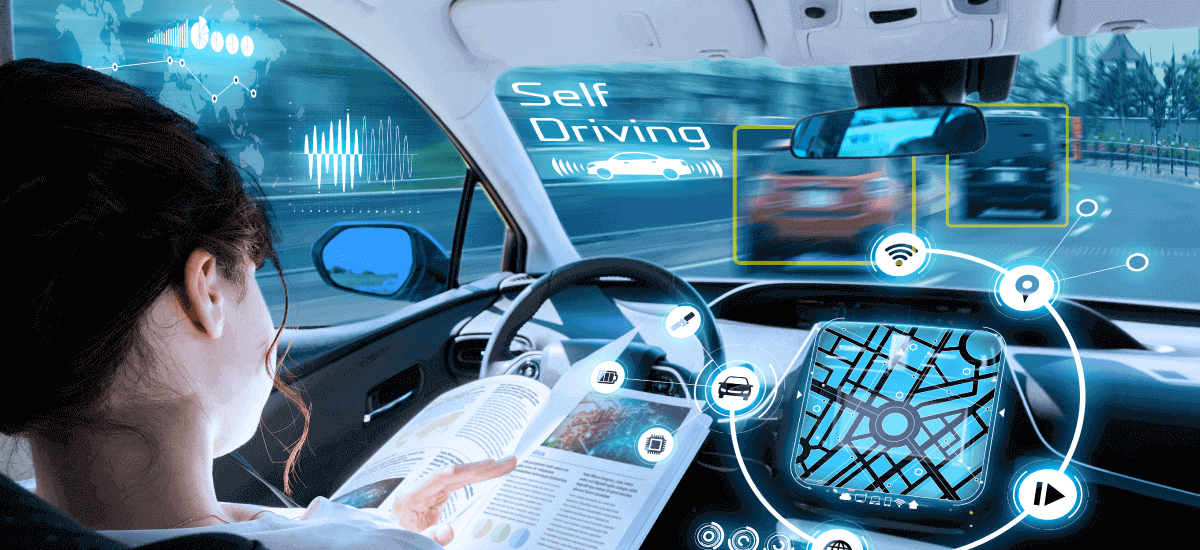

Self-driving cars, also known as autonomous vehicles (AVs), have quickly moved from science fiction to reality, thanks to significant advancements in artificial intelligence (AI), machine learning, and sensor technologies. Companies like Tesla, Waymo, and Cruise are leading the charge in developing vehicles capable of navigating roads with minimal human intervention. But what exactly powers these self-driving cars, and how do they operate safely in real-world environments?

In this article, we delve into the technology behind self-driving cars, how they work, and what you need to know about the future of autonomous driving.

1. Artificial Intelligence and Machine Learning: The Brain of Autonomous Vehicles

At the core of every self-driving car is artificial intelligence (AI). These vehicles rely on AI systems to analyze vast amounts of sensor data in real time and make driving decisions, much as a human driver does. Machine learning algorithms allow AVs to recognize patterns in that data, so their behavior can improve as they are trained on more driving experience.

Deep Learning for Object Detection

One of the key components of AI in self-driving cars is deep learning, a subset of machine learning. Deep learning algorithms process data from sensors and cameras to detect and classify objects such as pedestrians, other vehicles, road signs, and obstacles. As these models are retrained on more driving data, their detection accuracy improves, which helps autonomous vehicles understand their surroundings and make more accurate decisions.

Decision-Making and Path Planning

Once an AV has gathered data about its environment, it uses AI to decide the best course of action. Path planning algorithms help the car determine the optimal route, avoid collisions, and adhere to traffic rules. These decisions must be made in real time, often within milliseconds, to ensure the safety of passengers and other road users.
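To make the idea of path planning concrete, here is a minimal sketch using A* search on a small occupancy grid. A* is one classic planning technique, chosen here for illustration; the article does not say which planners production AVs use, and real systems plan in continuous space with vehicle dynamics and traffic rules. The grid, the `astar` function, and the 4-connected movement model are all assumptions for this example.

```python
import heapq


def astar(grid, start, goal):
    """Find a shortest 4-connected path on a grid of 0 (free) and 1 (blocked).

    Returns a list of (row, col) cells from start to goal, or None if the
    goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance never overestimates on a 4-connected grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]
    came_from = {start: None}
    best_cost = {start: 0}

    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # Stale heap entry; a cheaper route was already found.
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, float("inf")):
                    best_cost[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(
                        open_heap, (new_cost + heuristic(nxt), new_cost, nxt)
                    )
    return None


# Hypothetical map: the middle row is blocked except for the right-hand cell.
road = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]
route = astar(road, (0, 0), (2, 0))
```

Here the planner has to detour around the blocked cells, which is the grid-world equivalent of routing around an obstacle.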

2. Sensor Technologies: How Self-Driving Cars Perceive the World

Self-driving cars rely on a combination of sensors to gather information about their surroundings. These sensors, which include LiDAR, radar, cameras, and ultrasonic sensors, work together to create a comprehensive picture of the vehicle’s environment, much like a human uses their senses to drive.

LiDAR: Light Detection and Ranging

LiDAR is one of the most important sensing technologies in autonomous driving. It uses lasers to create high-resolution 3D maps of the environment by measuring the time it takes for light pulses to bounce off surrounding objects and return. This allows self-driving cars to accurately detect objects, measure distances, and navigate even in low-light conditions. Waymo, one of the leaders in self-driving technology, relies heavily on LiDAR for its autonomous vehicles.
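The time-of-flight principle reduces to simple arithmetic: the pulse travels to the object and back, so the range is half the round-trip time multiplied by the speed of light. The sketch below shows that calculation and how a single return could be turned into a 3D point. The function names and the axis convention (x forward, y left, z up) are assumptions for illustration, not any particular LiDAR vendor's API.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def lidar_range_m(round_trip_s):
    """Range to the reflecting object from the pulse's round-trip time."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def polar_to_xyz(range_m, azimuth_rad, elevation_rad):
    """Convert one return to a 3D point (assumed axes: x forward, y left, z up)."""
    horizontal = range_m * math.cos(elevation_rad)
    return (
        horizontal * math.cos(azimuth_rad),
        horizontal * math.sin(azimuth_rad),
        range_m * math.sin(elevation_rad),
    )


# A pulse that returns after 200 nanoseconds hit something about 30 m away.
distance_m = lidar_range_m(200e-9)
point = polar_to_xyz(distance_m, math.radians(15.0), math.radians(-2.0))
```

Repeating this for many pulses per sweep is what builds up the point cloud that the 3D map is made from.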

Radar: Long-Range Sensing

Radar is another critical sensor that uses radio waves to detect objects and measure their speed and distance. Radar is particularly effective in adverse weather conditions, such as rain, fog, or snow, where visibility may be compromised. It is commonly used for functions like adaptive cruise control and collision avoidance.

Cameras: Visual Perception

Self-driving cars are equipped with multiple cameras that provide visual information about the vehicle’s surroundings. Cameras are essential for recognizing traffic signs, lane markings, and signals. Some systems use stereo cameras, which capture the scene from two slightly offset viewpoints to estimate depth and distance, similar to how human eyes work.

Ultrasonic Sensors: Close-Range Detection

Ultrasonic sensors are used for detecting nearby objects and are particularly useful during parking maneuvers. These sensors emit sound waves that bounce off objects, providing real-time data about the vehicle’s immediate surroundings, such as curbs or other parked cars.

3. Connectivity: Communicating with the Outside World

Connectivity is another key element in the technology stack of self-driving cars. Vehicle-to-Everything (V2X) communication enables AVs to exchange information with other vehicles, infrastructure, and networks, enhancing safety and efficiency.

Vehicle-to-Vehicle (V2V) Communication

With V2V communication, self-driving cars can share information about their position, speed, and direction with other nearby vehicles. This reduces the risk of accidents by enabling cars to anticipate and react to each other’s movements, especially in complex situations like intersections or merging lanes.
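As a sketch of how shared position, speed, and direction let one car anticipate another, the example below takes two such messages and computes when and how closely the vehicles would pass if both held their course. The `V2VMessage` fields, the heading convention, and the constant-velocity assumption are all illustrative; real V2V message formats are defined by standards and carry far more information.

```python
import math
from dataclasses import dataclass


@dataclass
class V2VMessage:
    """Hypothetical minimal V2V payload in a shared flat coordinate frame."""
    x_m: float
    y_m: float
    speed_mps: float
    heading_deg: float  # Assumption: 0 = +x axis, counter-clockwise positive.

    def velocity(self):
        heading = math.radians(self.heading_deg)
        return (self.speed_mps * math.cos(heading),
                self.speed_mps * math.sin(heading))


def closest_approach(a, b):
    """Return (time_s, distance_m) of closest approach at constant velocity."""
    avx, avy = a.velocity()
    bvx, bvy = b.velocity()
    rx, ry = b.x_m - a.x_m, b.y_m - a.y_m      # relative position
    wx, wy = bvx - avx, bvy - avy              # relative velocity
    rel_speed_sq = wx * wx + wy * wy
    if rel_speed_sq == 0.0:
        t = 0.0  # Same velocity: the gap never changes.
    else:
        # Minimize |r + w*t|; clamp so we only look into the future.
        t = max(0.0, -(rx * wx + ry * wy) / rel_speed_sq)
    return t, math.hypot(rx + wx * t, ry + wy * t)


# Two cars approaching the same intersection at right angles.
eastbound = V2VMessage(x_m=0.0, y_m=0.0, speed_mps=10.0, heading_deg=0.0)
northbound = V2VMessage(x_m=50.0, y_m=-50.0, speed_mps=10.0, heading_deg=90.0)
time_s, gap_m = closest_approach(eastbound, northbound)
```

In this scenario both cars reach the intersection at the same moment, so the predicted gap is essentially zero and one of them would need to slow down.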

Vehicle-to-Infrastructure (V2I) Communication

V2I communication allows self-driving cars to communicate with infrastructure like traffic lights, road signs, and parking facilities. For example, a car could receive data from a smart traffic light indicating how long it will remain red, enabling the vehicle to adjust its speed or reroute to avoid congestion.
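The traffic-light example can be sketched as a simple speed advisory: given the distance to the light and the time until it turns green, pick a speed that arrives just as the light changes instead of stopping. The function name, parameters, and the minimum-speed floor are assumptions for illustration; a real system would also account for queues, comfort, and signal timing uncertainty.

```python
def advisory_speed_mps(distance_m, seconds_until_green,
                       speed_limit_mps, min_speed_mps=3.0):
    """Suggest a speed that reaches the light as it turns green.

    The result is clamped between a minimum crawl speed (an assumed floor)
    and the speed limit.
    """
    if seconds_until_green <= 0:
        return speed_limit_mps  # Already green: no reason to slow down.
    ideal = distance_m / seconds_until_green
    return max(min_speed_mps, min(ideal, speed_limit_mps))


# 200 m from a light that stays red for another 20 s, limit 15 m/s.
glide_speed = advisory_speed_mps(200.0, 20.0, speed_limit_mps=15.0)
# Light turns green in 5 s: even the limit arrives after the change.
capped_speed = advisory_speed_mps(200.0, 5.0, speed_limit_mps=15.0)
```

Slowing to the advisory speed early avoids a full stop, which is where the efficiency gain in this scenario comes from.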

Cloud-Based Connectivity

Many autonomous vehicles are connected to cloud systems, where they can access real-time data such as traffic conditions, weather reports, and map updates. Cloud-based connectivity also allows manufacturers to update software remotely, ensuring that the vehicle is always operating with the latest features and safety enhancements.

4. Mapping and Localization: Knowing Where You Are

To navigate accurately, self-driving cars need to know their precise location on the road. This is achieved through high-definition (HD) maps and localization technologies.

HD Maps for Precision Navigation

Unlike standard GPS maps, HD maps used by self-driving cars are incredibly detailed and include information about road curvature, lane markings, traffic lights, and road signs. These maps help AVs understand the environment in a highly precise manner. Companies like HERE Technologies and TomTom are at the forefront of creating HD maps for autonomous vehicles.

GPS and Inertial Measurement Units (IMUs)

Self-driving cars use GPS and inertial measurement units (IMUs) to track their location. GPS provides absolute geospatial position, while IMUs use accelerometers and gyroscopes to measure the vehicle’s acceleration and rotation. When GPS signals are weak, such as in tunnels or urban areas with tall buildings, the vehicle can keep estimating its position from the IMU measurements until a reliable GPS fix returns, although that estimate drifts the longer it goes uncorrected.
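The way an IMU bridges a GPS outage can be sketched as dead reckoning: start from the last known position and repeatedly integrate the measured acceleration and turn rate. This is a simplified 2D model with hypothetical names, and it ignores sensor noise and bias, which is exactly what causes drift in practice.

```python
import math
from dataclasses import dataclass


@dataclass
class VehicleState:
    x_m: float
    y_m: float
    heading_rad: float
    speed_mps: float


def dead_reckon_step(state, forward_accel_mps2, yaw_rate_radps, dt_s):
    """Advance the position estimate by one IMU sample (simple Euler step)."""
    speed = state.speed_mps + forward_accel_mps2 * dt_s
    heading = state.heading_rad + yaw_rate_radps * dt_s
    return VehicleState(
        x_m=state.x_m + speed * math.cos(heading) * dt_s,
        y_m=state.y_m + speed * math.sin(heading) * dt_s,
        heading_rad=heading,
        speed_mps=speed,
    )


# Last GPS fix at the tunnel entrance: origin, heading along +x at 10 m/s.
state = VehicleState(x_m=0.0, y_m=0.0, heading_rad=0.0, speed_mps=10.0)
for _ in range(10):  # Ten IMU samples, 0.1 s apart, driving straight.
    state = dead_reckon_step(state, forward_accel_mps2=0.0,
                             yaw_rate_radps=0.0, dt_s=0.1)
```

After one second of straight driving the estimate has moved about 10 m along the x axis. Production systems typically blend GPS, IMU, and map matching in a filter instead of relying on raw integration.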

5. Autonomous Driving Levels: Understanding the Spectrum of Automation

Autonomous vehicles are commonly categorized using the six levels of driving automation defined in SAE International’s J3016 standard, ranging from Level 0 (no automation) to Level 5 (full automation).

Level 1-2: Driver Assistance and Partial Automation

At Level 1 and Level 2, vehicles can assist with specific driving tasks such as lane keeping and adaptive cruise control, but the driver is still required to remain engaged and ready to take control at any time. Tesla’s Autopilot is an example of Level 2 automation, where the car can steer, accelerate, and brake, but a human driver must monitor the system.

Level 3: Conditional Automation

At Level 3, the vehicle can handle most driving tasks under specific conditions, such as on highways. However, the driver must be ready to intervene if the system encounters a situation it cannot handle. Audi, for example, developed a Level 3 system intended for slow-moving highway traffic that lets drivers take their hands off the wheel and alerts them when human input is required.

Level 4-5: High and Full Automation

Level 4 and Level 5 vehicles can drive without a human ready to take over. Level 4 AVs operate autonomously only within predefined conditions, such as in certain cities or on designated highways. In contrast, Level 5 vehicles are fully autonomous and can handle all driving tasks in any environment, with no need for a human driver. Companies such as Waymo and Cruise have focused on Level 4 robotaxi services within defined service areas, while Level 5 remains a longer-term goal that could revolutionize transportation.
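The practical difference between the levels is what the human is still responsible for. The sketch below encodes the levels described above as an enumeration and maps each one to the driver's role. The class and function names are hypothetical, and the role descriptions paraphrase this article, not the wording of any standard.

```python
from enum import IntEnum


class AutomationLevel(IntEnum):
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def human_role(level):
    """Summarize what the human must do at a given automation level."""
    if level <= AutomationLevel.PARTIAL_AUTOMATION:
        return "drive or continuously monitor the system"
    if level == AutomationLevel.CONDITIONAL_AUTOMATION:
        return "be ready to take over when the system requests it"
    if level == AutomationLevel.HIGH_AUTOMATION:
        return "no takeover needed within the predefined conditions"
    return "no human driver needed"


for level in AutomationLevel:
    print(f"Level {level.value}: {human_role(level)}")
```

The key boundary sits between Level 2 and Level 3: below it the human supervises at all times, and above it the system is responsible for monitoring the road while it is engaged.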

6. Safety Challenges and Ethical Considerations

While the technology behind self-driving cars is advancing rapidly, there are still significant challenges to overcome, particularly related to safety and ethics.

Sensor Fusion and Redundancy

For self-driving cars to operate safely, data from multiple sensors must be combined and analyzed, a process known as sensor fusion. The sensors must work together, and the system needs built-in redundancy: if one sensor fails, another must be able to take over to prevent accidents.

Ethical Decision-Making

Self-driving cars must also be programmed to make difficult ethical decisions in emergency situations. For example, if a collision is unavoidable, should the car prioritize the safety of its passengers or pedestrians? Addressing these ethical dilemmas is a major challenge for developers of autonomous systems.

Conclusion: The Road Ahead for Self-Driving Cars

The technology behind self-driving cars is complex, combining advancements in AI, sensors, connectivity, and mapping. While fully autonomous vehicles are not yet widespread, the rapid progress in autonomous driving technology suggests that self-driving cars could become a common sight on our roads. As these technologies continue to evolve, they promise to improve road safety, reduce traffic congestion, and transform the way we travel.